There is a wealth of information available online, but many of our questions are about the world around us. That’s why we started working on Google Lens: to put the answers right where the questions are, and to give you more ways to interact with what you see.

We introduced Lens in Google Photos and the Assistant last year. People are already using it to answer a variety of questions, particularly those that are difficult to describe in a search box, such as “what type of dog is that?” or “what’s the name of that building?”

We announced today at Google I/O that Lens will be available directly in the camera app on supported devices from LGE, Motorola, Xiaomi, Sony Mobile, HMD/Nokia, Transsion, TCL, OnePlus, BQ, Asus, and, of course, the Google Pixel. We also announced three updates that will allow Lens to answer more questions about more things in less time:

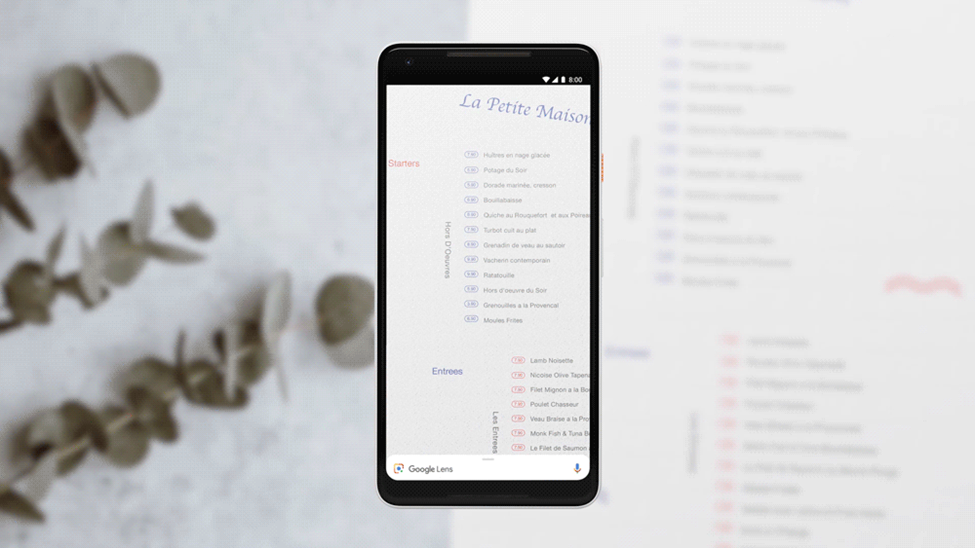

First, smart text selection connects the words you see with the answers and actions you need. You can copy text from the real world, like recipes, gift card codes, or Wi-Fi passwords, and paste it on your phone. Lens also helps you make sense of a page of words by showing you relevant information and photos. Say you’re at a restaurant and see the name of a dish you don’t recognize: Lens will show you a picture to give you a better idea. This requires recognizing not just the shapes of letters, but also the meaning and context behind the words. This is where our years of work on language understanding in Search come in.

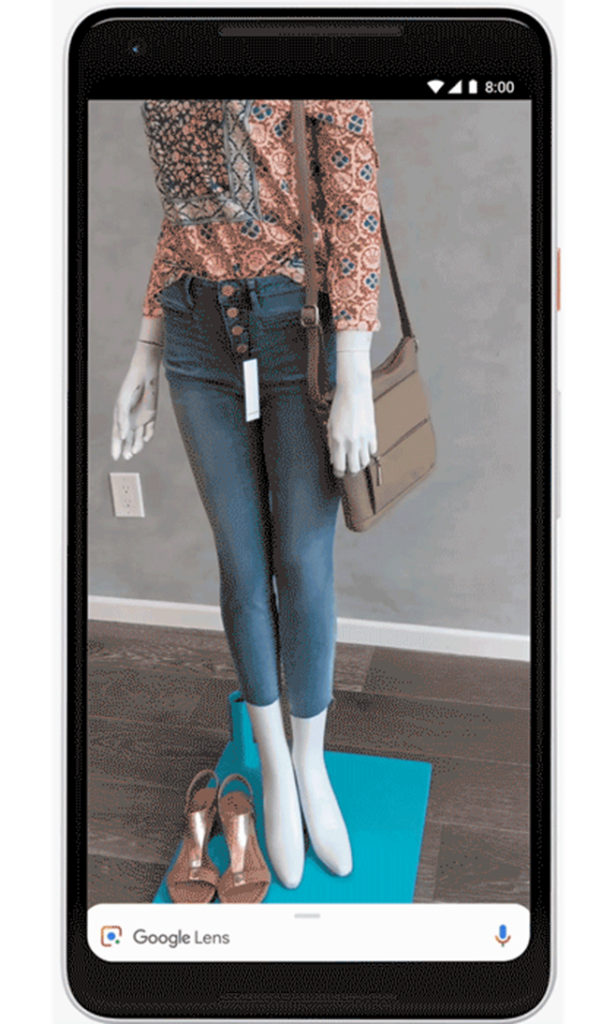

Second, sometimes your question isn’t “what exactly is that thing?” but “what are things like it?” With style search, if an outfit or home decor item catches your eye, you can open Lens and not only get information on that specific item (such as reviews), but also see items in a similar style that fit the look you like.

Third, Lens now works in real time. It can proactively surface information instantly and anchor it to the things you see, so you can explore the world around you simply by pointing your camera. This is only possible with state-of-the-art machine learning, using both on-device intelligence and cloud TPUs to identify billions of words, phrases, places, and things in a fraction of a second.
